A couple weeks ago, Scott Alexander wrote “Toward a Bayesian Theory of Willpower”. This is my recap of the theory, my tentative verdict, and what I think it means for Beeminder and motivation hacking more generally.

Let’s start with defining terms! Akrasia means failing to do something you rationally want to do. Not just something you “should” do. Something you genuinely want to do, and can do, yet somehow fail to do. Akrasia is the name for that “somehow”. Willpower means overcoming that problem via pure introspection.

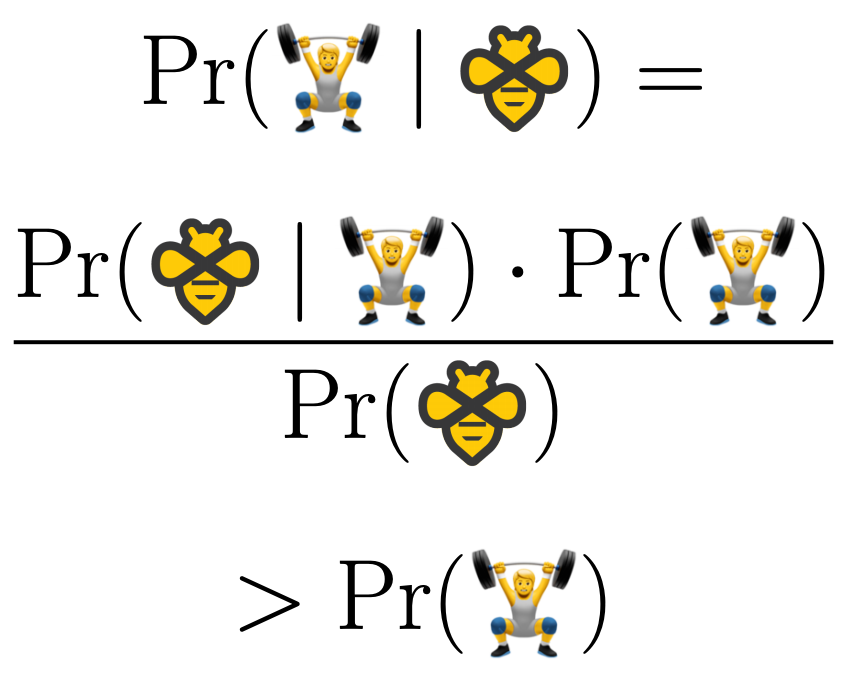

Scott Alexander’s theory says that your brain collects and weights evidence from different mental subprocesses to determine every action you take. The three subprocesses are:

- Do nothing

- Do what’s most immediately rewarding

- Do what you consciously deem best

These all submit evidence via dopamine to your basal ganglia and the winner determines what action you take. There’s a high prior probability on “do nothing” being best, which can be overridden by high enough anticipation of reward, which can be overridden by high enough evidence from your conscious mind.

In this theory, akrasia — Scott Alexander says “lack of willpower” — is an imbalance in these subprocesses. Physiologically maybe that means insufficient dopamine in your frontal cortex such that the evidence from your conscious brain is underweighted. (Dopaminergic drugs seem to increase willpower so I guess that argues in favor of the theory? I’m so out of my depth here.) Hacking your motivation would mean increasing the evidence supplied by your intellectual/logical brain.
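To make the weighing concrete, here is a toy sketch of the three-way contest. It is my own simplification, not Scott Alexander's actual model: the function name `choose_action`, the multiplicative weights, and every number are made up for illustration. Each subprocess submits a bid, the "do nothing" bid is a fixed prior, and the highest bid wins.

```python
def choose_action(evidence, weights, do_nothing_prior=1.0):
    """Return the winning bid in a toy three-way contest (illustrative only)."""
    bids = {
        # The prior on inaction is a fixed bar the other bids must clear.
        "do nothing": do_nothing_prior,
        # Each remaining bid is raw evidence scaled by a weight,
        # standing in (very loosely) for dopamine.
        "immediately rewarding": weights["reward"] * evidence["reward"],
        "consciously best": weights["conscious"] * evidence["conscious"],
    }
    return max(bids, key=bids.get)


# Hypothetical evidence: the conscious mind has the stronger case.
evidence = {"reward": 3.0, "conscious": 5.0}

# Akrasia: the conscious evidence is underweighted, so the reward bid wins.
akratic = choose_action(evidence, {"reward": 1.0, "conscious": 0.5})

# Motivation hacking: raise the conscious weight and the outcome flips.
hacked = choose_action(evidence, {"reward": 1.0, "conscious": 1.0})

# Weak evidence all around: the prior on doing nothing wins.
idle = choose_action({"reward": 0.5, "conscious": 1.0},
                     {"reward": 1.0, "conscious": 0.5})

print(akratic, "|", hacked, "|", idle)
```

Nothing about the evidence changes between the first two calls. Only the weight on the conscious bid does, which is the sense in which akrasia is an imbalance rather than a lack of good reasons.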

Hyperbolic Discounting

Decades before Scott Alexander’s post, various psychologists and economists came up with the theory of dynamic inconsistency, also known as hyperbolic discounting. In this theory, your brain does whatever maximizes the net present value of expected future utility (i.e., you figure out the optimal thing and then do it) except your future-discounting is broken. That’s the akrasia. You over-weight immediate consequences.
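Here is a small sketch of what "broken" discounting looks like. It uses the standard hyperbolic form, value = amount / (1 + k × delay), next to ordinary exponential discounting. The reward sizes, the delays, and the parameters `k` and `delta` are arbitrary numbers I picked for illustration, and the helper `preferred` is hypothetical.

```python
def hyperbolic_value(amount, delay, k=1.0):
    """Present value under hyperbolic discounting: amount / (1 + k * delay)."""
    return amount / (1.0 + k * delay)


def exponential_value(amount, delay, delta=0.9):
    """Present value under exponential discounting: amount * delta ** delay."""
    return amount * delta ** delay


def preferred(discount, lead_time):
    """Compare a small reward at lead_time with a larger one 5 time units later."""
    small = discount(5.0, lead_time)
    large = discount(10.0, lead_time + 5)
    return "small-soon" if small > large else "large-late"


# Viewed from far away, the hyperbolic discounter prefers the bigger reward...
from_afar = preferred(hyperbolic_value, 10)

# ...but flips to the smaller one once it becomes immediately available.
up_close = preferred(hyperbolic_value, 0)

# The exponential discounter makes the same choice at both distances.
consistent = [preferred(exponential_value, t) for t in (0, 10)]

print(from_afar, up_close, consistent)
```

The flip between `from_afar` and `up_close` is the dynamic inconsistency: the same two options, ranked differently depending only on how close the tempting one is.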

Everything Is A Nail?

Under hyperbolic discounting my answer to akrasia is “use commitment devices to bring long-term consequences near!”. Under the Bayesian theory, my answer is “use commitment devices to drive up the weight on the intellectual evidence!”. This is suspiciously convenient coming from me, speaking for Beeminder.
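As a rough illustration of the hyperbolic-discounting version of that answer, here is a sketch in which a pledge attaches an immediate cost to the tempting option. The function `choice`, the payoffs, and the assumption that a pledge can be measured in the same units as the rewards are all mine, purely for illustration. This is not a description of how Beeminder computes anything.

```python
def hyperbolic_value(amount, delay, k=1.0):
    """Present value under hyperbolic discounting: amount / (1 + k * delay)."""
    return amount / (1.0 + k * delay)


def choice(pledge):
    """Pick between slacking off now and a bigger payoff 5 time units away.

    Slacking off pays 5 right away but forfeits the pledge right away,
    so both terms are valued at delay 0.
    """
    slack_off = hyperbolic_value(5.0, 0) - hyperbolic_value(pledge, 0)
    do_the_work = hyperbolic_value(10.0, 5)
    return "slack off" if slack_off > do_the_work else "do the work"


# With nothing on the line, the immediate reward wins.
without_pledge = choice(0.0)

# A pledge of 4 makes the long-term option win without changing the discounting.
with_pledge = choice(4.0)

print(without_pledge, "->", with_pledge)
```

The discount function is untouched in both calls. The commitment device works by moving a consequence to where the broken discounting can't shrink it.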

But if the answer is the same either way, then what good actually is the Bayesian theory? Does it have any practical applications that we don’t already have with the hyperbolic discounting theory? Is it all just a just-so story with neuroscience window dressing?

My claim is that from Beeminder’s pragmatic perspective all theories of akrasia converge where the rubber meets the road. Hyperbolic discounting says you over-weight immediate consequences. The Bayesian theory says you over-weight the evidence from your pure reinforcement learner, which only cares about immediate consequences. We can construct other theories. Take Kahneman’s “Thinking, Fast and Slow”: your System 1 takes precedence in your decision-making. Every conceivable theory (er, that I can conceive of) involves over-weighting immediate consequences in one way or another.

UPDATE: At this point in the originally published version of this post we went on a fascinating tangent about how Beeminder is all about commitment devices but that really we can generalize that to… Incentive Alignment. We liked the point (and the term) so much we decided it should be its own blog post. But you don’t need to go off on the tangent now. The concept of commitment devices is pretty general but it always involves the interplay between short-term and long-term incentives. Let’s stay focused on Scott Alexander’s theory!

Scott Alexander’s description of what’s going on at the neurological level is fine (unqualified as I am to judge it) but in terms of what it means for motivation hacking, I don’t see a difference. Maybe it’s like how relativity is more correct and general than Newtonian physics but at human-scale Newton suffices. The Bayesian theory of willpower may be more correct and general than hyperbolic discounting, I’m not sure, but for all practical motivational purposes they prescribe the same thing.

All that said, if you have any approaches to aligning your own incentives — upping the weight of your intellectual evidence — that only make sense in the Bayesian paradigm, I want to hear about them!

Thanks to Adam Wolf, Bee Soule, and Michael Tiffany for reading drafts of this.