This is part 1 of a two-part series. First we explain loss aversion and how it’s distinct from the endowment effect. (Spoiler: loss aversion is a generalization of the endowment effect.) Asking Google how those things are different currently yields a fog of opaque logorrhea, so we hope this is enlightening. We’ve also collected some cute examples to convince you how irrational loss aversion is.

Part 2 might be embarrassing. In the past we’ve talked up how we harness loss aversion for productive motivation. Tune in next time for why we’re disavowing that.

The endowment effect is like a “but it’s miiiine” bias — an irrationally increased value for the feeling of owning something. Not that valuing anything — even (especially) vague feelings — is necessarily irrational. It’s only irrational if you’re inconsistent. The most familiar example of irrational/inconsistent preferences may be addiction, where you want something but you don’t want to want it. The endowment effect is a bit like that.

Imagine you’d pay up to $100 for a snozzwobbit. So your value for it — including the warm fuzzy feeling of owning it — is $100. That means losing the snozzwobbit — and the concomitant warm fuzziness — should drop your utility by that same $100. If not, then something fishy has happened.

Of course there are a million ways to rationalize such asymmetry. Maybe you didn’t know how much you’d like the thing until you experienced it, or you just don’t want to deal with transaction costs or risk of getting scammed. But if we control for all those things — and many studies have tried to do so — we can call the asymmetry the endowment effect.

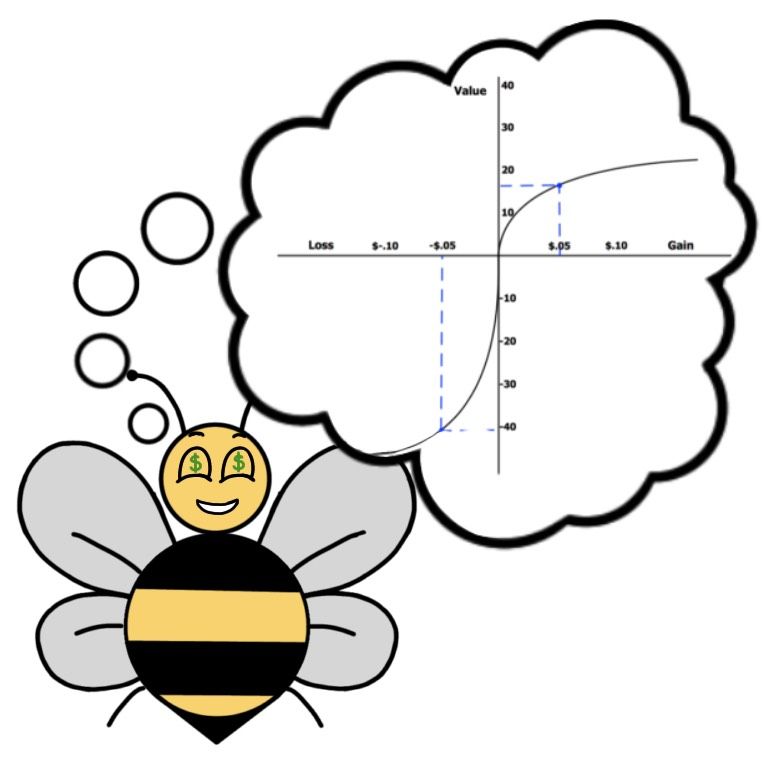

Loss aversion is more general. Technically it means having an asymmetric utility function around an arbitrary reference point, where you think of a decrease from that reference point as a loss. Again, the weirdness of a utility function isn’t itself irrational. You like what you like! But the arbitrariness of the reference point can yield inconsistencies which are irrational. You can reframe gains and losses with a different reference point and people’s utility function will totally change.
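Here's a minimal sketch of that asymmetry, assuming the simplest possible shape: gains count at face value and losses count `LAMBDA` times as much. The function name `value` and `LAMBDA = 2.0` are our own hypothetical choices for illustration, not a fitted model. The point is that the very same outcome gets a different score depending only on where you put the reference point.

```python
# A toy loss-averse value function. LAMBDA is a hypothetical loss-aversion
# coefficient; 2.0 is just an illustrative round number.
LAMBDA = 2.0


def value(outcome: float, reference: float) -> float:
    """Score an outcome relative to a reference point.

    Gains above the reference count once; losses below it count LAMBDA times.
    """
    change = outcome - reference
    return change if change >= 0 else LAMBDA * change


# Same final outcome ($50), two framings:
print(value(50, reference=0))    # framed as a $50 gain  → 50
print(value(50, reference=100))  # framed as a $50 loss → -100.0
```

Nothing about the $50 changed. Only the reference point moved, and the score flipped from +50 to -100.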

Consider the Allais paradox. Imagine a choice between the following:

- A certain million dollars

- A probable million dollars, a possible five million dollars, and a tiny chance of zero dollars

People mostly prefer the certainty, which is entirely unobjectionable. [1] Now imagine this choice:

- A probable zero dollars and a possible million dollars

- A slightly more probable zero dollars and a slightly smaller chance of five million dollars

Now people feel like they might as well go for the five million. And — here’s the paradox — you can put numbers on those “probables” and “possibles” such that the choices are inconsistent. Rationally, either five million is sufficiently better than one million to be worth a bigger risk of getting nothing, or it’s not. But it doesn’t feel that way. In the first choice you can guarantee yourself a million dollars. You don’t want to risk losing that by taking option 2 and ending up with bupkis. In the second choice, neither option has any guarantee. You’re choosing between different possible gains with different probabilities.

Thus is your reasoning distorted.
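Here's the paradox with numbers attached. The post doesn't pin down the probabilities, so this sketch assumes the classic ones usually quoted for the Allais paradox: the risky first option is 89% a million, 10% five million, 1% nothing; the second choice is 11% a million (else nothing) versus 10% five million (else nothing). The three utility functions are arbitrary examples. The check shows that, for any of them, the expected-utility gap between the two options is the same in both choices.

```python
import math

# Lotteries as (probability, dollars) pairs, using the classic Allais numbers.
A = [(1.00, 1_000_000)]                                # certain million
B = [(0.89, 1_000_000), (0.10, 5_000_000), (0.01, 0)]  # probable million, possible five
C = [(0.11, 1_000_000), (0.89, 0)]                     # possible million
D = [(0.10, 5_000_000), (0.90, 0)]                     # slightly smaller shot at five million


def expected_utility(lottery, u):
    """Probability-weighted average of u(dollars) over a lottery."""
    return sum(p * u(x) for p, x in lottery)


def allais_gaps(u):
    """Return (EU(A) - EU(B), EU(C) - EU(D)) for utility function u."""
    return (expected_utility(A, u) - expected_utility(B, u),
            expected_utility(C, u) - expected_utility(D, u))


# A few arbitrary utility functions, from linear to quite concave.
utilities = {
    "linear": lambda x: x,
    "sqrt": math.sqrt,
    "log": math.log1p,  # log(1 + x), so that $0 is allowed
}

for name, u in utilities.items():
    gap1, gap2 = allais_gaps(u)
    print(f"{name:>6}: A-B = {gap1:+.6g}   C-D = {gap2:+.6g}")
```

Whatever the utility function, the two gaps match (up to floating-point rounding), because both reduce to `0.11 u(1M) - 0.10 u(5M) - 0.01 u(0)`. So taking the sure million in the first choice and the five-million shot in the second can't both maximize expected utility.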

If you’re talking about your utility for snozzwobbits then the obvious reference point is the number of snozzwobbits you currently own. If your utility for an additional snozzwobbit is much less than your disutility for giving up one of your snozzwobbits, that’s suspicious. Still not inherently irrational; maybe you have just the number of snozzwobbits you need and one more would be superfluous. But if we see that same asymmetry — how much you’d pay for an additional snozzwobbit vs how much you’d sell one for — regardless of how many snozzwobbits you own, that’s irrational.

So there you have it. The endowment effect is a kind of loss aversion where the arbitrary reference point (for your snozzwobbit valuation, say) is however many you currently own. And the Allais paradox example shows that literal endowment/ownership isn't required for this cognitive bias to appear.

Let’s drive this home with some less abstract examples.

Garage Space

Here’s a partially real-world example, courtesy of my sister.

World A: Your car doesn’t fit into your garage. By spending $1000 you can have the garage widened or something. No way, you say, we’ll just keep the car outside.

World B: Your car does fit in your garage and you’re offered $1000 to have it not fit anymore. Maybe your neighbor offers you that to store their car in your garage. No way, you say, can’t give up that garage space.

But those are inconsistent. What’s the value to you of having your car fit? If less than $1000 then you were right in World A but wrong in World B. If more than $1000 then it’s the other way around. There’s no good way to rationalize saying no in both worlds, but people do.

I say “no good way”, which invites a lot of nitpicking and well-actuallying. There are uncertainties to account for, or social dynamics with your neighbor, blah blah blah. But when the discrepancy between willingness-to-pay and willingness-to-accept is big enough, it's pretty suspect. In some experiments, willingness-to-accept comes out around double willingness-to-pay.
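Here's the garage logic as a tiny sketch. The function names and the `wta_multiplier` knob are hypothetical: a multiplier of 1 models someone whose willingness-to-accept equals their willingness-to-pay, and a multiplier of 2 mimics a factor-of-two gap. For the consistent agent, the only value at which saying no in both worlds is defensible is exactly $1000, where they're indifferent anyway. With the gap, a whole band of values produces the double refusal.

```python
def choices(value_of_fit, offer=1000, wta_multiplier=1.0):
    """Return (pays in World A, accepts in World B) for someone who values
    having the car fit at value_of_fit dollars.

    wta_multiplier inflates how much giving up the fit seems to cost;
    1.0 is a consistent agent, 2.0 mimics a WTA/WTP gap of about two.
    """
    pays_to_widen = value_of_fit > offer                   # World A: buy the fit
    accepts_offer = wta_multiplier * value_of_fit < offer  # World B: sell the fit
    return pays_to_widen, accepts_offer


def refuses_both(offer=1000, wta_multiplier=1.0, step=10, max_value=5000):
    """Values of the fit (on a $step grid) at which the agent says no in both worlds."""
    return [v for v in range(0, max_value + 1, step)
            if choices(v, offer, wta_multiplier) == (False, False)]


consistent = refuses_both(wta_multiplier=1.0)
biased = refuses_both(wta_multiplier=2.0)
print(consistent)                # → [1000]
print(min(biased), max(biased))  # → 500 1000
```

With a factor-of-two gap, anyone who values the fit somewhere between $500 and $1000 refuses both deals, which is exactly the "no in both worlds" pattern.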

Lawn Mowing

You know how people will mow their own lawn or change their own oil because it seems extravagant to pay someone $20 to do something they can perfectly well do themselves? But if a neighbor offers to pay you $20 to mow their lawn, you say no. That's inconsistent! I mean, at some point you'd run out of time, but if it makes sense to turn down paying someone $20 to mow your lawn, then you should also accept when your neighbor offers you $20 to mow theirs. Either it's worth $20 to get out of a lawn mowing or it isn't.

And, again, you can always find excuses. Maybe you don't trust someone else to do as good a job, or don't know if your neighbor will be happy with how good a job you do. It makes sense for there to be some gap between willingness-to-pay and willingness-to-accept. It's just that people seem to have nonsensically large gaps.

Buying vs Getting Bumped From Flights

This is an example Bee and I spotted in the Boston airport one Sunday in August.

First, imagine you’re buying flights and there’s a flight at a much more convenient time, saving you basically a day of wasted travel time, but it costs $800 more. Almost everyone (in my socio-economic circles) chooses to save the $800.

Then you get to the gate for your flight and it's overbooked. They're asking for volunteers to be bumped to a flight that's 8 hours later, and they'll pay you $800 in actual money, not those scammy airline vouchers. Almost everyone chooses to stay on the flight they have (we checked!).

If a whole day of your time isn't worth $800 to you, then 8 hours of it certainly isn't. So people are probably being irrational, either to save the $800 in the first case or to not take the $800 in the second case!

Can we rationalize this one? Easily. An unexpected 8-hour travel delay may be much worse than a similar delay you can plan for. But plenty of people are plainly being inconsistent/irrational in these situations.
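Setting that rationalization aside, the inconsistency is just arithmetic if you assume your time is worth a constant amount per hour, which is precisely the assumption the unexpected-delay excuse attacks. The post only says the pricier flight saves "basically a day", so `hours_saved_by_buying = 16` (roughly a day's waking hours) is our own hypothetical stand-in, as is the function name.

```python
def implied_hourly_bounds(price=800, hours_saved_by_buying=16, hours_lost_by_bump=8):
    """Bounds on what an hour of your time is worth, implied by both refusals.

    Assumes a constant dollar value per hour (a simplifying assumption).
    """
    # Refused to pay `price` to save hours_saved_by_buying, so an hour is worth at most:
    upper = price / hours_saved_by_buying
    # Refused `price` in exchange for losing hours_lost_by_bump, so an hour is worth at least:
    lower = price / hours_lost_by_bump
    return lower, upper


lower, upper = implied_hourly_bounds()
print(f"at least ${lower:.0f}/hour, at most ${upper:.0f}/hour")
print("consistent" if lower <= upper else "inconsistent")
```

Any value of `hours_saved_by_buying` above 8 pushes the lower bound above the upper bound, so no single hourly value of time justifies both refusals.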

Shut Up And Guess

Scott Alexander has a fun example from medical school in which med students persist in using a suboptimal strategy on a multiple-choice test because the feeling of losing points stings more than the feeling of gaining points salves. See also a fascinating paper on Guessing and Gambling.

You have to twist yourself into especially tangled knots to rationalize this one.
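The arithmetic behind "shut up and guess" is short. We don't know the exact scoring rule on that test, so this sketch assumes a hypothetical one: +1 for a right answer, 0 for a blank, and minus `penalty` for a wrong answer. Under that rule, guessing has positive expected value whenever the penalty is below 1/(k - 1), where k is the number of answers you haven't ruled out.

```python
from fractions import Fraction


def guess_ev(options_left: int, penalty: Fraction) -> Fraction:
    """Expected points from guessing uniformly among options_left answers,
    under a hypothetical +1 right / -penalty wrong / 0 blank scoring rule."""
    p_right = Fraction(1, options_left)
    return p_right - (1 - p_right) * penalty


def break_even_penalty(options_left: int) -> Fraction:
    """Penalty at which guessing and leaving it blank have equal expected value
    (requires options_left >= 2)."""
    return Fraction(1, options_left - 1)


penalty = Fraction(1, 4)  # hypothetical: lose a quarter point per wrong answer
for k in (5, 4, 3, 2):
    print(k, "options left: EV of guessing =", guess_ev(k, penalty))
```

With five options and a quarter-point penalty, a blind guess exactly breaks even; rule out just one answer and guessing gains 1/16 of a point on average. Skipping leaves those points on the table, but a wrong guess feels like a loss.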

Decluttering

I personally very much suffer from the endowment effect bias and I try to consciously override it. When cleaning house and it feels hard to throw something away, I can sometimes snap myself out of it by reframing the question: “What would I pay to acquire this? Oh, right, zero dollars. So my loss aversion is irrational and this is zero skin off my nose to throw away!”

Beeminder

What if Beeminder is telling me to, I don’t know, publish a blog post and I’m staring bleary-eyed at a pile of notes and I really don’t want to? But also I’m really averse to being charged money, and so I get the dang post out the door? Is that not fighting irrationality with irrationality, for the greater good?

Nope, we’re doubling down.

In part 2 we’ll make our case for fighting irrationality with rationality instead. (Spoiler: that still means using Beeminder.)

PS: And, no, loss aversion has not been debunked.

Footnotes

[1] In terms of lifechangingness, five million dollars is not that different from one million dollars. Econ-nerd version: You have concave utility for money. Equivalently: You are risk-averse.

Image credit: Faire Soule-Reeves and Wikipedia