As you likely know if you’ve ever beeminded your weight, you can add a moving average line on top of your data on your Beeminder graph. You can also add a so-called aura around your datapoints. The idea is to see trends in your data without being distracted by daily fluctuations, particularly when data is coming from inherently unpredictable (aka noisy) sources like your weight.

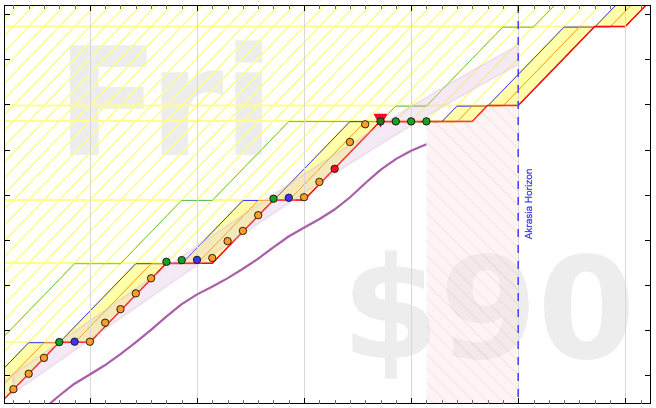

Until recently both the moving average and the aura were somewhat wrong and ugly. In particular, the moving average line (MAV) used an exponentially weighted average of past datapoints, aka exponential smoothing. That approach has nice properties and is very simple to implement, but it means the smoothed line lags behind the actual data, as if the line got shifted to the right. If you turned on the moving average for a monotonic graph like a do-more (not that it makes sense to do that for a do-more goal), you'd see that the moving average line was very visibly offset from the actual datapoints. Like this:

(Side note: This offset is billed as a feature in The Hacker’s Diet. We talk about it in a previous blog post about whether you should beemind the moving average. We’ve mostly convinced ourselves that all the nice properties of exponential smoothing touted in The Hacker’s Diet still apply to our non-laggy smoothing. Namely, making your weight go down still pulls the moving average down. But feel free to debate this with us if you’re attached to the Hacker’s Diet scheme.)
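To see where that offset comes from, here is a minimal Python sketch of exponential smoothing. The function name `exp_smooth` and the smoothing factor `alpha = 0.1` are illustrative choices, not Beeminder's actual code or settings. Fed a steadily rising series, like a do-more goal that gains one unit per day, the smoothed line settles a constant `(1 - alpha) / alpha` days behind the data:

```python
def exp_smooth(xs, alpha):
    """Exponential smoothing: y[n] = alpha * x[n] + (1 - alpha) * y[n-1].

    The output is seeded with the first datapoint, so y[0] == x[0].
    """
    ys = []
    y = xs[0]
    for x in xs:
        y = alpha * x + (1 - alpha) * y
        ys.append(y)
    return ys


# A monotonic "do-more" style series: one unit per day for 200 days.
ramp = [float(day) for day in range(200)]
smoothed = exp_smooth(ramp, alpha=0.1)

# The ramp rises one unit per day, so the vertical gap equals the lag in days.
lag = ramp[-1] - smoothed[-1]
print(f"{lag:.3f}")  # 9.000, i.e. (1 - 0.1) / 0.1 days
```

Smaller values of `alpha` smooth more aggressively, but the lag `(1 - alpha) / alpha` grows accordingly.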

Moving on from the moving average to the aura: the idea is to visualize a coarse envelope around the entirety of your data. The aura shows how variable your data is (indicated by the thickness of the aura) as well as what direction it's heading. A second purpose of the aura is to provide a crude projection of how your data would progress a week into the future. Until recently the aura used a polynomial (of third degree or lower) that best fit the visible portion of the data. However, since Beeminder goals usually exhibit a wide range of data trends (variations based on different seasons, motivation levels, life circumstances, etc.), such a low-degree polynomial approximation often failed, sometimes miserably, to achieve either of these desiderata. In the following example it's doing fine at showing the data's variation, not so fine at projecting the trend forward:

For all those reasons, we recently revamped both the MAV and the aura. Here’s how they work!

The algorithms are based on filtering methods from signal processing. In particular, there are two commonly used types of digital filters: finite impulse response (FIR) and infinite impulse response (IIR) filters. FIR filters are akin to moving average filters with a finite window and are commonly used, for example, in image processing for effects such as blurring. IIR filters are closer to the exponential filter mentioned above and belong to the more general class of recursive filters. In an IIR filter, incoming data is passed through a discrete dynamical system that uses past and present values of both the data and the filter's own state to compute the next filtered datapoint. Both alternatives have pros and cons in terms of computational efficiency and accuracy, but it turned out that the faster IIR filters are better suited to Beeminder's use cases.
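Here is a minimal Python sketch of the linear difference equation underlying both filter types, where `b` weights the current and past inputs and `a` weights the past outputs that are fed back. The helper name `lfilter` follows a common convention (SciPy's `scipy.signal.lfilter` takes the same argument order), but this is our own illustrative code, not Beeminder's. Feeding in a single spike shows where the names come from: the FIR response stops after a fixed number of samples, while the IIR response decays without ever reaching zero.

```python
def lfilter(b, a, xs):
    """Apply the linear difference equation
        a[0]*y[n] = sum_k b[k]*x[n-k] - sum_{k>=1} a[k]*y[n-k]
    to xs, treating all values before xs[0] as zero.
    With a == [1] there is no feedback (FIR); otherwise the filter is IIR.
    """
    ys = []
    for n in range(len(xs)):
        acc = sum(b[k] * xs[n - k] for k in range(len(b)) if n - k >= 0)
        acc -= sum(a[k] * ys[n - k] for k in range(1, len(a)) if n - k >= 0)
        ys.append(acc / a[0])
    return ys


impulse = [1.0] + [0.0] * 7

# FIR: a 4-day moving average. Its impulse response ends after 4 samples.
fir = lfilter([0.25] * 4, [1.0], impulse)

# IIR: exponential smoothing y[n] = 0.5*x[n] + 0.5*y[n-1], i.e. b = [0.5], a = [1, -0.5].
iir = lfilter([0.5], [1.0, -0.5], impulse)

print(fir)  # [0.25, 0.25, 0.25, 0.25, 0.0, 0.0, 0.0, 0.0]
print(iir)  # [0.5, 0.25, 0.125, 0.0625, 0.03125, 0.015625, 0.0078125, 0.00390625]
```

The recursion is also why IIR filters are cheap: each output needs only a few multiplications, no matter how long the effective averaging window is.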

In signal processing, such filters are characterized by their frequency response: how much of each frequency component of the original signal they let through to their output, and how much delay they introduce at each of those frequencies. A useful analogy is the equalizer on a sound system, which lets you boost or cut the high, low, or midrange frequencies of a sound signal, for instance to eliminate noise. That analogy is in fact very close to what we do, since both the MAV and the aura should be obtained by eliminating high-frequency changes (daily fluctuations) while keeping the low-frequency components (week-to-week or month-to-month trends). Consequently, what we need are so-called low-pass filters, which let the low-frequency components of the data signal through and block the high-frequency components.
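Here is a minimal Python sketch, assuming one datapoint per day, that computes a filter's frequency response from its difference-equation coefficients (`b` for the inputs, `a` for the fed-back outputs). `freq_response` is a hypothetical helper, and `alpha = 0.2` is an illustrative value, not Beeminder's. For exponential smoothing it shows the low-pass behavior: a yearly trend passes with a gain close to 1 (but about 4 days of delay), a weekly cycle is cut to roughly a quarter, and a day-to-day zigzag to exactly a ninth.

```python
import cmath
import math


def freq_response(b, a, period_days):
    """Gain and phase delay of the filter (b, a) for a sinusoid with the given
    period, assuming one sample per day.

    Evaluates B/A at the one-day delay factor exp(-j*w), where
    w = 2*pi / period_days is the angular frequency in radians per day.
    """
    w = 2 * math.pi / period_days
    z = cmath.exp(-1j * w)
    num = sum(bk * z**k for k, bk in enumerate(b))
    den = sum(ak * z**k for k, ak in enumerate(a))
    h = num / den
    gain = abs(h)                  # fraction of the amplitude that gets through
    delay = -cmath.phase(h) / w    # how many days the output lags the input
    return gain, delay


# Exponential smoothing with alpha = 0.2: y[n] = 0.2*x[n] + 0.8*y[n-1].
b, a = [0.2], [1.0, -0.8]
for period in (365, 30, 7, 2):
    gain, delay = freq_response(b, a, period)
    print(f"period {period:>3} days: gain {gain:.3f}, delay {delay:.2f} days")
```

The nonzero delay at long periods is the old MAV's lag seen from the frequency side.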

Low-pass filters are parameterized primarily by their cutoff frequency, the frequency threshold beyond which they strongly attenuate, rather than pass, components of the signal. In our updated algorithms, we chose a cutoff frequency for the MAV such that data components that fluctuate within a 20-day window are suppressed. For the aura, we chose a larger fluctuation period (hence a smaller cutoff frequency) of 20 to 500 days, picked dynamically based on the number of visible datapoints to optimize its visual appearance and utility. The result is what you see in the before/after shots below.
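The post doesn't say which low-pass design Beeminder uses, so as an illustrative stand-in here is a minimal Python sketch of a standard second-order Butterworth low-pass, designed with the bilinear transform for one-datapoint-per-day data. Reading "fluctuations within a 20-day window" as a cutoff period of 20 days is our assumption, and `butter2_lowpass` and `gain` are hypothetical helper names:

```python
import cmath
import math


def butter2_lowpass(cutoff_period_days):
    """Second-order Butterworth low-pass via the bilinear transform (with
    frequency prewarping), for data sampled once per day. Returns (b, a).
    """
    fc = 1.0 / cutoff_period_days      # cutoff frequency in cycles per day
    k = math.tan(math.pi * fc)         # prewarped analog cutoff (fs = 1/day)
    norm = 1.0 / (1.0 + math.sqrt(2) * k + k * k)
    b0 = k * k * norm
    b = [b0, 2 * b0, b0]
    a = [1.0, 2 * (k * k - 1) * norm, (1 - math.sqrt(2) * k + k * k) * norm]
    return b, a


def gain(b, a, period_days):
    """Magnitude of the frequency response at the given period (in days)."""
    z = cmath.exp(-2j * math.pi / period_days)
    num = sum(bk * z**i for i, bk in enumerate(b))
    den = sum(ak * z**i for i, ak in enumerate(a))
    return abs(num / den)


b, a = butter2_lowpass(20)  # hypothetical 20-day cutoff, as for the MAV
for period in (200, 40, 20, 10, 5):
    # Slow trends pass almost untouched; the gain is 1/sqrt(2) exactly at the cutoff.
    print(f"period {period:>3} days: gain {gain(b, a, period):.3f}")
```

SciPy's `scipy.signal.butter(2, 1/20, fs=1)` should give the same coefficients up to rounding. For the aura's longer periods, the same design would simply be called with a larger `cutoff_period_days`, which lowers the cutoff frequency.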

We had to address a few key technical issues to get these graphs. The first fundamental problem is that both FIR and IIR filters introduce what is called a “group delay” on the input, so that the output signal always lags behind the input signal. This was precisely the problem with the original MAV line. One cannot eliminate this delay in signal processing applications where the output must not depend on future data (i.e. the filter has to be causal). But since Beeminder has all your data (all your data belong to us!), we do not have to abide by causality: we can apply the same filter once forwards (introducing a delay of +D) and once backwards (introducing a delay of -D), resulting in an output signal with no delay! This is why the new MAV line follows your data much more closely than the old one did.
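Here is a minimal Python sketch of this forward-backward trick, using exponential smoothing (`alpha = 0.2`) as a stand-in for the actual low-pass filter; `lfilter`, `filtfilt`, and `centroid` are hypothetical helper names. A single spike makes the delay easy to see: filtering forwards only smears the spike to the right, while filtering forwards and then backwards spreads it symmetrically around the original day.

```python
def lfilter(b, a, xs):
    """Linear difference equation with zero initial state (FIR if a == [1], else IIR)."""
    ys = []
    for n in range(len(xs)):
        acc = sum(b[k] * xs[n - k] for k in range(len(b)) if n - k >= 0)
        acc -= sum(a[k] * ys[n - k] for k in range(1, len(a)) if n - k >= 0)
        ys.append(acc / a[0])
    return ys


def filtfilt(b, a, xs):
    """Zero-phase filtering: filter forwards, then filter the reversed result
    and reverse it back, so the backward pass cancels the forward pass's delay."""
    forward = lfilter(b, a, xs)
    backward = lfilter(b, a, forward[::-1])
    return backward[::-1]


def centroid(xs):
    """Center of mass of a non-negative sequence, in samples (days)."""
    return sum(i * x for i, x in enumerate(xs)) / sum(xs)


# A single spike on day 100 of 201.
spike = [0.0] * 201
spike[100] = 1.0
b, a = [0.2], [1.0, -0.8]

print(f"{centroid(lfilter(b, a, spike)):.2f}")   # forward only: about 104, shifted right
print(f"{centroid(filtfilt(b, a, spike)):.2f}")  # forward-backward: about 100, no shift
```

SciPy's `scipy.signal.filtfilt` implements the same idea with its own edge handling (by default it pads the signal with an odd extension), which is exactly the initialization question discussed next.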

The second fundamental issue that plagued the use of these filters was “initialization”. Since IIR filters have internal state, they need to be properly initialized to make sure that the initial parts of the output also closely mirror the input. The need to also apply the filter backwards makes this more challenging, since the last part of the data becomes the “initial state” for this backwards application.

Our solution to this problem was to first shift all datapoints by an affine function of the day index so that the adjusted data starts and ends at zero, i.e. `nd[i] = d[i] - (d[0] + i/N*(d[N] - d[0]))` for datapoints `d[0]` through `d[N]`, and then append a sufficient number of zeros to the end of this sequence to ensure a smooth ending for the filtered signal. After filtering (i.e. `ndf = iir_filter(nd)`), we recovered the original data values by applying the inverse of this adjustment, i.e. `df[i] = ndf[i] + (d[0] + i/N*(d[N] - d[0]))`.
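Putting these steps together, here is a minimal Python sketch of the whole smoothing pipeline. It uses exponential smoothing (`alpha = 0.2`) as a stand-in for the real low-pass filter, and the helper names and the padding length of 100 zeros are hypothetical choices for illustration, not Beeminder's actual code:

```python
def lfilter(b, a, xs):
    """Linear difference equation with zero initial state."""
    ys = []
    for n in range(len(xs)):
        acc = sum(b[k] * xs[n - k] for k in range(len(b)) if n - k >= 0)
        acc -= sum(a[k] * ys[n - k] for k in range(1, len(a)) if n - k >= 0)
        ys.append(acc / a[0])
    return ys


def filtfilt(b, a, xs):
    """Forward pass, then a backward pass over the reversed result."""
    forward = lfilter(b, a, xs)
    return lfilter(b, a, forward[::-1])[::-1]


def zero_lag_smooth(d, b, a, pad=100):
    """Smooth d[0..N] without lag. `pad` (number of trailing zeros) is a
    hypothetical default; it only needs to let the filter's response die out."""
    N = len(d) - 1
    if N < 1:
        return list(d)
    # Affine baseline through the first and last datapoints.
    line = [d[0] + i / N * (d[N] - d[0]) for i in range(N + 1)]
    # nd starts and ends at zero, matching the filter's zero initial state.
    nd = [di - li for di, li in zip(d, line)]
    # Trailing zeros give the backward pass a quiet, zero-valued start; trim them after.
    ndf = filtfilt(b, a, nd + [0.0] * pad)[: N + 1]
    # Undo the adjustment: df[i] = ndf[i] + baseline[i].
    return [v + li for v, li in zip(ndf, line)]


# Hypothetical data: an upward trend plus a day-to-day zigzag.
d = [0.5 * i + (1.0 if i % 2 else -1.0) for i in range(61)]
df = zero_lag_smooth(d, [0.2], [1.0, -0.8])
print([round(x, 2) for x in df[28:33]])
```

One consequence of the detrending step is that perfectly linear data passes through unchanged, since its adjusted version is all zeros.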

Finally, a small but important requirement for these filters is that the data be uniformly sampled (i.e. one datapoint every day), which is of course not always true for Beeminder goals. We remedy this by using linear interpolation to fill in the gaps between successive datapoints that are more than one day apart.
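Here is a minimal Python sketch of that gap-filling step. It assumes datapoints arrive as `(day, value)` pairs sorted by an integer day number, with at most one value per day (several datapoints on the same day would need to be combined first); `to_daily` is a hypothetical helper name:

```python
def to_daily(points):
    """Resample sorted (day, value) pairs onto a uniform one-per-day grid,
    filling multi-day gaps by linear interpolation between neighbors."""
    if not points:
        return []
    daily = []
    for (d0, v0), (d1, v1) in zip(points, points[1:]):
        for day in range(d0, d1):
            t = (day - d0) / (d1 - d0)  # fraction of the way from d0 to d1
            daily.append((day, v0 + t * (v1 - v0)))
    daily.append(points[-1])  # the last datapoint closes the series
    return daily


print(to_daily([(0, 70.0), (4, 68.0), (5, 69.0)]))
# [(0, 70.0), (1, 69.5), (2, 69.0), (3, 68.5), (4, 68.0), (5, 69.0)]
```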

And there you have it! Actually you’ve had it since May and we just took this long to get the blog post together. But now you know the rest of the story.